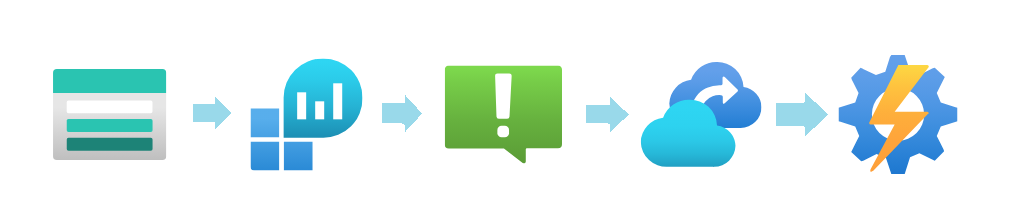

In part 1 of this series we set up an initial backup instance for a storage account that automatically adds all available containers to the backup policy. This time we will set up a chain of events that makes sure the backup instance is updated every time a new container is created. Real continuous backup management!

The Setup

Azure storage accounts have a native Event Grid integration that emits messages on certain actions. Unfortunately, creating a blob container is not one of those events. That’s why we need to create a workaround using Log Analytics.

- Create a diagnostic setting that stores the audit logs of a storage account's blob service in a Log Analytics workspace

- Create an alert that is triggered by a record in the Log Analytics workspace

- Create an action group, triggered by the alert, that can call an automation runbook

- Create an Azure Automation account with an identity and the role assignment needed to update a backup instance

- Create an Azure CLI script to sync the backup instance

Step-by-Step

1. Create the diagnostic setting

Let’s start with an existing storage account, blob service and Log Analytics workspace. Here we target the audit log of the blob service, where an event is logged whenever a new blob container is created. These events will be queryable in the targeted Log Analytics workspace.

resource logAnalyticsWorkspace 'Microsoft.OperationalInsights/workspaces@2023-09-01' existing = {
  name: 'yasen-la'
}

resource storageAccount 'Microsoft.Storage/storageAccounts@2023-05-01' existing = {
  name: 'yasenarchive' // Storage account names allow only lowercase letters and digits
}

resource blobService 'Microsoft.Storage/storageAccounts/blobServices@2021-06-01' existing = {
  parent: storageAccount
  name: 'default'
}

// Create the diagnostic setting
resource diagnosticsBlob 'Microsoft.Insights/diagnosticSettings@2021-05-01-preview' = {
  scope: blobService
  name: 'diagnostics00'
  properties: {
    workspaceId: logAnalyticsWorkspace.id
    logs: [
      {
        categoryGroup: 'audit' // We need the blob service audit logs; 'audit' is a category group, not a single category
        enabled: true
      }
    ]
  }
}

2. Create an alert

Now that our audit logs are being stored, we can create an alert that watches them.

resource alert 'microsoft.insights/scheduledqueryrules@2024-01-01-preview' = {
  name: 'BlobContainerCreated'
  location: 'westeurope'
  kind: 'LogAlert'
  properties: {
    displayName: 'BlobContainerCreated'
    severity: 3
    enabled: true
    evaluationFrequency: 'PT15M' // Will run every 15 minutes
    scopes: [
      logAnalyticsWorkspace.id
    ]
    targetResourceTypes: [
      'Microsoft.OperationalInsights/workspaces'
    ]
    windowSize: 'PT15M'
    overrideQueryTimeRange: 'P2D'
    criteria: {
      allOf: [
        {
          // The query below checks whether there have been any CreateContainer events in the last 15 minutes
          query: 'StorageBlobLogs | where TimeGenerated > ago(15m) and OperationName == \'CreateContainer\''
          timeAggregation: 'Count'
          dimensions: []
          resourceIdColumn: '_ResourceId'
          operator: 'GreaterThan'
          threshold: 0
          failingPeriods: {
            numberOfEvaluationPeriods: 1
            minFailingPeriodsToAlert: 1
          }
        }
      ]
    }
    autoMitigate: false
    actions: {
      // When the alert goes off, it will trigger this action group
      actionGroups: [
        action_group.id
      ]
    }
  }
}

In the next step we will set up the action group.

3. Create an action group

Here we configure the action group to trigger an automation runbook's webhook.

// Our action group executes an Automation Account runbook
resource action_group 'microsoft.insights/actionGroups@2024-10-01-preview' = {
  name: 'SyncStorageBackup'
  location: 'Global'
  properties: {
    groupShortName: 'SyncSABU'
    enabled: true
    automationRunbookReceivers: [
      {
        name: 'Runbook'
        useCommonAlertSchema: false
        automationAccountId: automationAccount.id
        runbookName: 'BackupSync'
        webhookResourceId: webhook.id
        isGlobalRunbook: false
      }
    ]
  }
}

In the next step we will set up the runbook and its webhook.

4. The Automation Runbook and its Webhook

To run our sync code, we need to add an automation runbook to an existing automation account.

// Note: your automation account should have a managed identity and the Backup Operator role on your Backup Vault
resource automationAccount 'Microsoft.Automation/automationAccounts@2024-10-23' existing = {
  name: 'yasen-automation'
}

// Here we declare the runbook
resource runbook 'Microsoft.Automation/automationAccounts/runbooks@2023-11-01' = {
  parent: automationAccount
  name: 'BackupSync'
  location: 'westeurope'
  properties: {
    description: 'Sync backup instance'
    logVerbose: false
    logProgress: false
    logActivityTrace: 0
    runbookType: 'PowerShell72' // The runtime is important for Azure CLI support
  }
}

// The runbook will also need a webhook.
// If we ever need to reuse the runbook for a different backup situation, we can create a new webhook.
resource webhook 'Microsoft.Automation/automationAccounts/webhooks@2024-10-23' = {
  parent: automationAccount
  name: 'AlertWebhook'
  properties: {
    isEnabled: true
    parameters: {
      STORAGEACCOUNTNAME: 'yasenarchive'
      STORAGEACCOUNTRESOURCEGROUP: 'archive-rg'
      BACKUPINSTANCENAME: 'storage-backup-instance-somefunkycode'
      BACKUPINSTANCERESOURCEGROUP: 'backup-rg'
      BACKUPVAULTNAME: 'testvault'
    }
    runbook: {
      name: runbook.name
    }
  }
}

This webhook will only be triggered by the alert, so we can pre-bake some parameters. Watch out! The ‘backup instance name’ isn’t the human-readable display name seen in the Azure portal. Get the real name through the JSON properties panel, the Azure CLI or Az PowerShell.
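The note at the top of this step assumes the automation account's managed identity already holds the Backup Operator role on the Backup Vault. A minimal sketch of that role assignment could look like the following. It assumes the deployment targets the resource group that holds the vault, and it takes the identity's principal ID as a hypothetical `automationPrincipalId` parameter. The vault API version and the built-in role GUID are my assumptions, so verify them (for example with `az role definition list --name "Backup Operator"`) before relying on them.

```bicep
// Hypothetical parameter: the object (principal) ID of the automation account's managed identity.
param automationPrincipalId string

// Assumption: this template is deployed to the resource group that contains the vault.
resource backupVault 'Microsoft.DataProtection/backupVaults@2023-05-01' existing = {
  name: 'testvault'
}

// Assumption: GUID of the built-in 'Backup Operator' role; verify it in your tenant before use.
var backupOperatorRoleId = subscriptionResourceId(
  'Microsoft.Authorization/roleDefinitions',
  '00c29273-979b-4161-815c-10b084fb9324'
)

resource backupOperatorAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = {
  scope: backupVault
  // Deterministic name, so redeploying does not create a second assignment
  name: guid(backupVault.id, automationPrincipalId, backupOperatorRoleId)
  properties: {
    roleDefinitionId: backupOperatorRoleId
    principalId: automationPrincipalId
    principalType: 'ServicePrincipal' // Managed identities are assigned as service principals
  }
}
```

If the vault lives in a different resource group than the rest of the deployment, the same resource would need to move into a module scoped to that resource group.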

5. Finally our script to sync our backup instance

This is a simple two-command setup. Luckily the CLI has a nice utility for generating a backup configuration that includes all containers in a storage account.

param(
    [string]$StorageAccountName,
    [string]$StorageAccountResourceGroup,
    [string]$BackupInstanceName,
    [string]$BackupInstanceResourceGroup,
    [string]$BackupVaultName
)

az login --identity # Log in using the automation account's identity

# Here we create the configuration we want to set:
# a backup configuration that includes all the containers.
# We just write it to a file to avoid encoding issues.
az dataprotection backup-instance initialize-backupconfig `
    --include-all-containers `
    --datasource-type 'AzureBlob' `
    --storage-account-name $StorageAccountName `
    --storage-account-resource-group $StorageAccountResourceGroup > config.json

# Execute the update.
# Beware! --backup-instance-name is not the simple human-readable name in the portal!
# Keep comments off the continued lines: a backtick only continues the line when it is the last character.
az dataprotection backup-instance update `
    --backup-instance-name $BackupInstanceName `
    -g $BackupInstanceResourceGroup `
    -v $BackupVaultName `
    --container-blob-list "config.json"

You can manually add this script to the runbook using the portal, or check out the blog about automation to see how to do it programmatically. You can do it with Bicep as well, using deployment scripts, but then it gets a bit silly.

Notes:

Why not just run the runbook on a schedule?

Yeah, you totally could; it depends on your frequency requirements. The scheduled job is much simpler, but might leave you with a coverage window that isn’t desirable. With this setup, a sync is triggered by the first alert evaluation (every 15 minutes here) that sees a new container being created, and nothing runs when nothing changes.
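For comparison, the scheduled variant could be sketched roughly as below. This is an illustration under assumptions, not a tested deployment: the schedule name `BackupSyncHourly`, the hourly frequency and the API versions for the schedule resources are my own choices, and the parameter values are simply copied from the webhook above.

```bicep
// utcNow() may only be used as a parameter default value
param baseTime string = utcNow('u')

// Assumption: start the schedule a little in the future so the start time is still valid at deployment
var scheduleStartTime = dateTimeAdd(baseTime, 'PT15M')

resource automationAccount 'Microsoft.Automation/automationAccounts@2024-10-23' existing = {
  name: 'yasen-automation'
}

// Hypothetical schedule that fires once per hour
resource hourlySchedule 'Microsoft.Automation/automationAccounts/schedules@2023-11-01' = {
  parent: automationAccount
  name: 'BackupSyncHourly'
  properties: {
    frequency: 'Hour'
    interval: 1
    startTime: scheduleStartTime
    timeZone: 'UTC'
  }
}

// Links the schedule to the BackupSync runbook; job schedule names are GUIDs
resource syncJobSchedule 'Microsoft.Automation/automationAccounts/jobSchedules@2023-11-01' = {
  parent: automationAccount
  name: guid(automationAccount.id, hourlySchedule.name, 'BackupSync')
  properties: {
    runbook: {
      name: 'BackupSync'
    }
    schedule: {
      name: hourlySchedule.name
    }
    parameters: {
      StorageAccountName: 'yasenarchive'
      StorageAccountResourceGroup: 'archive-rg'
      BackupInstanceName: 'storage-backup-instance-somefunkycode'
      BackupInstanceResourceGroup: 'backup-rg'
      BackupVaultName: 'testvault'
    }
  }
}
```

With this variant the diagnostic setting, alert, action group and webhook are no longer needed, at the cost of a sync delay of up to one schedule interval.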

How about removing containers?

You could automate that: just update the alert to also trigger on “DeleteContainer” events. The alert then triggers the sync, which in turn sets the backup configuration to only back up existing containers. This removes your deleted container from the backup schedule.
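One way to express that change, as a sketch: pull the query into a variable (the name `containerChangeQuery` is hypothetical) and match both operation names. A Bicep multi-line string avoids having to escape the single quotes inside the KQL.

```bicep
// Hypothetical variable holding the alert query; matches container creation and deletion events
var containerChangeQuery = '''
StorageBlobLogs
| where TimeGenerated > ago(15m)
| where OperationName in ('CreateContainer', 'DeleteContainer')
'''
```

In the alert's criteria you would then set `query: containerChangeQuery` instead of the inline string.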

Final Thoughts

Why isn’t this an option out of the box? I’m sure that by the time I release this, my solution will be outdated and there will just be a wildcard we can pass or a flag we can tick when creating a backup instance. But for now this is a nice, extensible, native setup that requires zero maintenance.